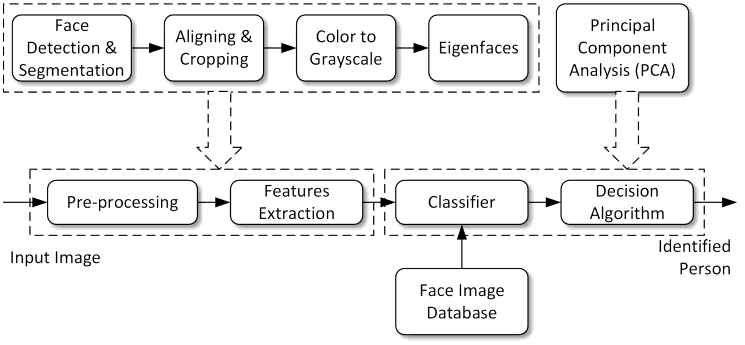

If you have worked with computer vision algorithms, you know how quickly performance drops in real-time applications on an average machine. We measure that performance quantitatively by the frame rate. Take face detection, for example: you want to monitor a scene continuously and, as soon as a human face appears, draw a bounding box around it. Let's break this down. First, you send the video stream to a server, where it is processed and analyzed. The server receives the stream, splits it into frames, and searches each frame for faces using a standard or application-tuned algorithm or filter. Once a face is detected, its coordinates are returned and a bounding box is drawn. For a machine, this is not as easy as it sounds, and the complexity grows with the task and the algorithms used.
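To make the frame-rate idea concrete, here is a minimal sketch of the loop described above: each frame is passed to a detector, the returned boxes are collected, and the frame rate is computed from the elapsed time. The names `run_detection` and `dummy_detector` are made up for illustration, and the detector is a stand-in that returns a fixed box rather than a real face detection algorithm.

```python
import time
from typing import Callable, Iterable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def run_detection(
    frames: Iterable[object],
    detect_faces: Callable[[object], List[Box]],
) -> Tuple[List[List[Box]], float]:
    """Run detect_faces on every frame; return boxes per frame and the frame rate."""
    results: List[List[Box]] = []
    start = time.perf_counter()
    for frame in frames:
        results.append(detect_faces(frame))
    elapsed = time.perf_counter() - start
    # Frames per second: processed frames divided by wall-clock time.
    fps = len(results) / elapsed if elapsed > 0 else float("inf")
    return results, fps


def dummy_detector(frame: object) -> List[Box]:
    # Hypothetical stand-in: pretends one face sits at a fixed location.
    return [(10, 20, 64, 64)]


if __name__ == "__main__":
    boxes, fps = run_detection(range(100), dummy_detector)
    print(f"{len(boxes)} frames processed at {fps:.1f} FPS")
```

Swapping `dummy_detector` for a real detector is where the frame rate usually collapses on a low-power machine, which is the problem the rest of this post is about.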

So, clearly, performance suffers on edge devices, where compute power is quite limited. What is the way around this? Enter the VPU!

VPU stands for Vision Processing Unit, a chip made for the sole purpose of accelerating vision algorithms in edge applications. One of the many popular VPUs is the Intel Neural Compute Stick (NCS), formerly known as the Myriad Compute Stick. It was made by Movidius, which was later acquired by Intel. The latest one on the market is the Intel Neural Compute Stick 2. It costs around 10k INR on Amazon as of Feb 2020.

Using this stick, you can develop, fine-tune, and deploy convolutional neural networks (CNNs) in low-power applications that require real-time inferencing.

There is good support for heterogeneous execution across computer vision accelerators (CPU, GPU, VPU, and FPGA) using a common API, along with Raspberry Pi hardware support.

Visit their website for all the information.

The NCS works in conjunction with Intel's well-documented OpenVINO toolkit. I'll be writing about the OpenVINO toolkit soon as well. To sum up this quickie: you can now build an ML/DL model, optimize it with the Model Optimizer from the OpenVINO toolkit (which generates .xml and .bin files), and deploy it on the NCS with, say, a Raspberry Pi or other hardware. The face detection algorithm can now be implemented with ease on any low-power edge device.
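As a rough sketch of the deployment step, the snippet below loads the .xml and .bin pair and compiles the model for the stick. It assumes an OpenVINO release that provides the `openvino.runtime` Python API and still ships the `MYRIAD` device plugin (newer releases have dropped it), and that both files share a base name. The helper names `ir_files` and `load_on_ncs` are made up for this post.

```python
from pathlib import Path
from typing import Tuple


def ir_files(xml_path: str) -> Tuple[Path, Path]:
    """Return the (.xml, .bin) pair, assuming both files share a base name."""
    xml = Path(xml_path)
    if xml.suffix != ".xml":
        raise ValueError(f"expected an .xml file, got {xml.name}")
    return xml, xml.with_suffix(".bin")


def load_on_ncs(xml_path: str, device: str = "MYRIAD"):
    """Compile the model for the given device ("MYRIAD" targets the NCS)."""
    # Imported lazily so the helper above works without OpenVINO installed.
    from openvino.runtime import Core

    xml, weights = ir_files(xml_path)
    core = Core()
    model = core.read_model(model=str(xml), weights=str(weights))
    # A device string such as "HETERO:MYRIAD,CPU" would instead ask OpenVINO
    # to fall back to the CPU for layers the stick does not support.
    return core.compile_model(model, device)


if __name__ == "__main__":
    # Hypothetical path; point this at your own optimized model.
    xml, weights = ir_files("models/face-detection.xml")
    print(xml, weights)
```

With the stick plugged in, calling `load_on_ncs` with your own model path should return a compiled model ready for inference.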

As always, thanks for reading 🙂 Let me know in the comment section where you plan to use this VPU. Suggestions for more topics to cover are always welcome. Signing off!